How to Spy on Competitors with Python & Data Studio by @seocounseling

Whether you’re new to SEO or a seasoned pro, investigating random ranking drops is just part of the trade.

There are many ways to investigate why your targeted keywords may have dropped, but using Python and Data Studio together is a game changer!

A quick disclaimer: I did not create this script myself. A fellow SEO and developer, Evan from Architek, collaborated with me to solve a particular need I had. Evan was the brains behind creating the script.

I always wanted to be able to view a large set of Google results at scale, without having to conduct these searches manually one-by-one.

Evan mentioned that Python may be the ideal solution for solving my SEO investigation needs.

Why Python Is Relevant to SEO

Python is an incredibly powerful programming language that can do just about anything. One of the more common uses for Python is automating monotonous daily tasks.

One of the coolest things about Python is that there are several different ways to accomplish the same task. However, this also adds a new level of difficulty.

Most of the sample Python scripts out there can be a bit outdated, so you’ll find yourself doing a lot of trial and error.

Python has many applications for SEO. The key is to have the right idea. If you have an idea to automate a task, chances are that a script can be created for it.

To stay up to date with the latest use cases for Python in SEO, Hamlet Batista has published some awesome articles here at Search Engine Journal.

What This Specific Script Does & Doesn’t Do

Most keyword ranking tools report an average ranking for a keyword over a specified timeframe. This Python script runs a single crawl at the time you run it from your IP address. This script is not meant to track keyword rankings.

The purpose of this script is to solve an issue I was having when investigating sudden ranking drops for my client and their competitors.

Most keyword ranking tools will tell you which of your domain’s pages rank for a keyword, but not which of your competitors’ pages rank highest for it.

So Why’s That Important?

In this scenario, we aren’t tracking page performance for the long term. We’re simply trying to get quick data.

This script allows us to quickly identify trends across the organic landscape and see which pages are performing best.

What You’ll Need to Get Started

If you’re new to Python, I recommend checking out the official Python tutorial or Automate the Boring Stuff.

For this tutorial I’m using PyCharm CE, but you can use Sublime Text or whatever your preferred development environment is.

This script is written in Python 3 and may be a bit advanced for folks new to this programming language.

If you have not found an interpreter or set up your first virtual environment yet, this guide can help you get started.

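Once you’re set up with a new virtual environment, you’ll need to install the following libraries (urllib and csv, which the script also uses, ship with Python):

- lxml

- requests

- cssselect (needed by the lxml cssselect() method that the script calls)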
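Before moving on, it can help to confirm that the libraries are importable from your virtual environment. The snippet below is a minimal sketch, not part of the original script: the `missing_packages` helper and the `REQUIRED` list are names made up for illustration, and cssselect is included on the assumption that lxml's `cssselect()` method needs it installed separately.

```python
from importlib.util import find_spec

# Third-party packages the script relies on. cssselect is listed on the
# assumption that lxml's cssselect() method needs it installed separately.
REQUIRED = ["lxml", "requests", "cssselect"]


def missing_packages(names):
    """Return the names from `names` that cannot be imported in this environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Install these before continuing:", ", ".join(missing))
else:
    print("All required packages are available.")
```

If anything is reported as missing, install it into the active virtual environment with pip and run the check again.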

Now that you’re all set up, let’s dive into some research together.

1. Make a List of Keywords to Investigate

We’re going to be using some sample data to investigate some keywords we’re pretending to track.

Let’s pretend that you looked at your keyword tracking software and noticed that the following keywords dropped more than five positions:

- SEO Tips

- Local SEO Advice

- Learn SEO

- Search Engine Optimization Articles

- SEO Blog

- SEO Basics

Disclaimer: Searching for too many keywords may result in your IP getting temporarily banned. Sending Google this many requests at once may appear spammy and will drain their resources. Use with caution and moderation.
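One way to act on this disclaimer is to pause between searches. The sketch below is an illustration rather than part of Evan's script: `throttled` is a hypothetical helper, and the 5 to 10 second default delay is an arbitrary assumption, not a limit published by Google.

```python
import random
import time


def throttled(items, min_delay=5.0, max_delay=10.0):
    """Yield each item, pausing for a random interval between consecutive items."""
    for index, item in enumerate(items):
        if index > 0:
            # Wait before every item except the first one.
            time.sleep(random.uniform(min_delay, max_delay))
        yield item


# Usage: wrap the keyword list so each search waits before it runs.
# The delays are set to zero here only so the demo finishes instantly.
for keyword in throttled(["SEO Tips", "Learn SEO"], min_delay=0, max_delay=0):
    print(keyword)
```

Wrapping the keyword loop this way slows the crawl down, which is the point. It reduces the chance of a ban but does not guarantee you will avoid one.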

The first thing we will do is place these keywords in a simple text file. The keywords should be separated with a line break, as shown in the screenshot below.
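If you would rather create the file from Python than by hand, a minimal sketch could look like this. The `write_keywords` helper is a made-up name for illustration. The file name `searches.txt` matches what the script opens later.

```python
from pathlib import Path

# The sample keywords from the list above.
KEYWORDS = [
    "SEO Tips",
    "Local SEO Advice",
    "Learn SEO",
    "Search Engine Optimization Articles",
    "SEO Blog",
    "SEO Basics",
]


def write_keywords(keywords, path="searches.txt"):
    """Write one keyword per line to `path` and return the path."""
    target = Path(path)
    target.write_text("\n".join(keywords) + "\n", encoding="utf-8")
    return target
```

Calling `write_keywords(KEYWORDS)` from the folder that holds your script produces a `searches.txt` with one keyword per line.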

2. Run the Python Ranking Investigation Script

The TL;DR of this script is that it does three basic things:

- Locates and opens your searches.txt file.

- Searches Google for each keyword and collects the results on the first page.

- Creates a new CSV file and prints the results (Keyword, URLs, and page titles).

For this script to work properly, you will need to run it in sections. First, we will need to import our libraries. Copy and paste the code below.

from urllib.parse import urlencode, urlparse, parse_qs
from lxml.html import fromstring
from requests import get
import csv

Next, you’ll be able to input the main function of this script in a single copy/paste action. This part of the script sets the actual steps taken, but will not execute the command until the third step.

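def scrape_run():
    csvfile = 'data.csv'
    with open('searches.txt') as searches:
        for search in searches:
            # Strip the trailing line break and skip empty lines
            userQuery = search.strip()
            if not userQuery:
                continue
            # URL-encode the keyword so spaces and symbols are sent safely
            raw = get("https://www.google.com/search?" + urlencode({'q': userQuery})).text
            page = fromstring(raw)
            # '.r a' depends on Google's markup at the time of writing and may need updating
            links = page.cssselect('.r a')
            for row in links:
                raw_url = row.get('href')
                title = row.text_content()
                # Result links are wrapped as /url?q=<destination>&...
                if raw_url and raw_url.startswith("/url?"):
                    url = parse_qs(urlparse(raw_url).query)['q']
                    csvRow = [userQuery, url[0], title]
                    # Append one row per result: keyword, URL, page title
                    with open(csvfile, 'a', newline='') as data:
                        writer = csv.writer(data)
                        writer.writerow(csvRow)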
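The least obvious step in the function is how the destination URL is pulled out of each link. The links the script keeps start with `/url?` and carry the real address in a `q` parameter. The sketch below isolates that step. The `extract_target` helper and the sample href are made up for illustration.

```python
from urllib.parse import parse_qs, urlparse


def extract_target(raw_url):
    """Return the destination URL from a '/url?' redirect href, else None."""
    if not raw_url or not raw_url.startswith("/url?"):
        return None
    params = parse_qs(urlparse(raw_url).query)
    # parse_qs maps each parameter to a list of values, hence the [0].
    values = params.get("q")
    return values[0] if values else None


# Hypothetical href, made up for illustration.
sample = "/url?q=https://example.com/seo-tips&sa=U"
print(extract_target(sample))  # https://example.com/seo-tips
```

This is why the script writes `url[0]` to the CSV: `parse_qs` always returns a list, even when a parameter appears only once.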

Now you’re ready to run the script. The final step is to copy/paste the command below and press the Return key.

scrape_run()

That’s it!

3. Use Data Studio to Analyze the Results

After running this command, you’ll notice that a new CSV file called data.csv has been created. These are your raw results, which we’ll need for the final step.
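To sanity-check the raw results before moving them anywhere, you could load the file back into Python. This is a minimal sketch: `load_results` is a hypothetical helper, and the column names are an assumption based on the order in which the script writes each row (keyword, URL, page title), since the file itself has no header row.

```python
import csv

# Column names are an assumption based on the order the script writes each row.
COLUMNS = ["Keyword", "URL", "Title"]


def load_results(path="data.csv"):
    """Read the scraped rows into a list of dicts keyed by COLUMNS."""
    with open(path, newline="") as handle:
        return [dict(zip(COLUMNS, row)) for row in csv.reader(handle) if row]
```

Calling `load_results()` in the folder that contains data.csv returns one dictionary per scraped result, which makes it easy to print the first few rows and confirm the crawl worked.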

My agency has created a handy Data Studio template for analyzing your results. In order to use this free report, you’ll need to paste your results into Google Sheets.

The page in the link above has in-depth instructions on how to set up your Data Studio report.

How to Analyze Our Results

Now that you have your new Data Studio report implemented, it’s time to make sense of all this data.

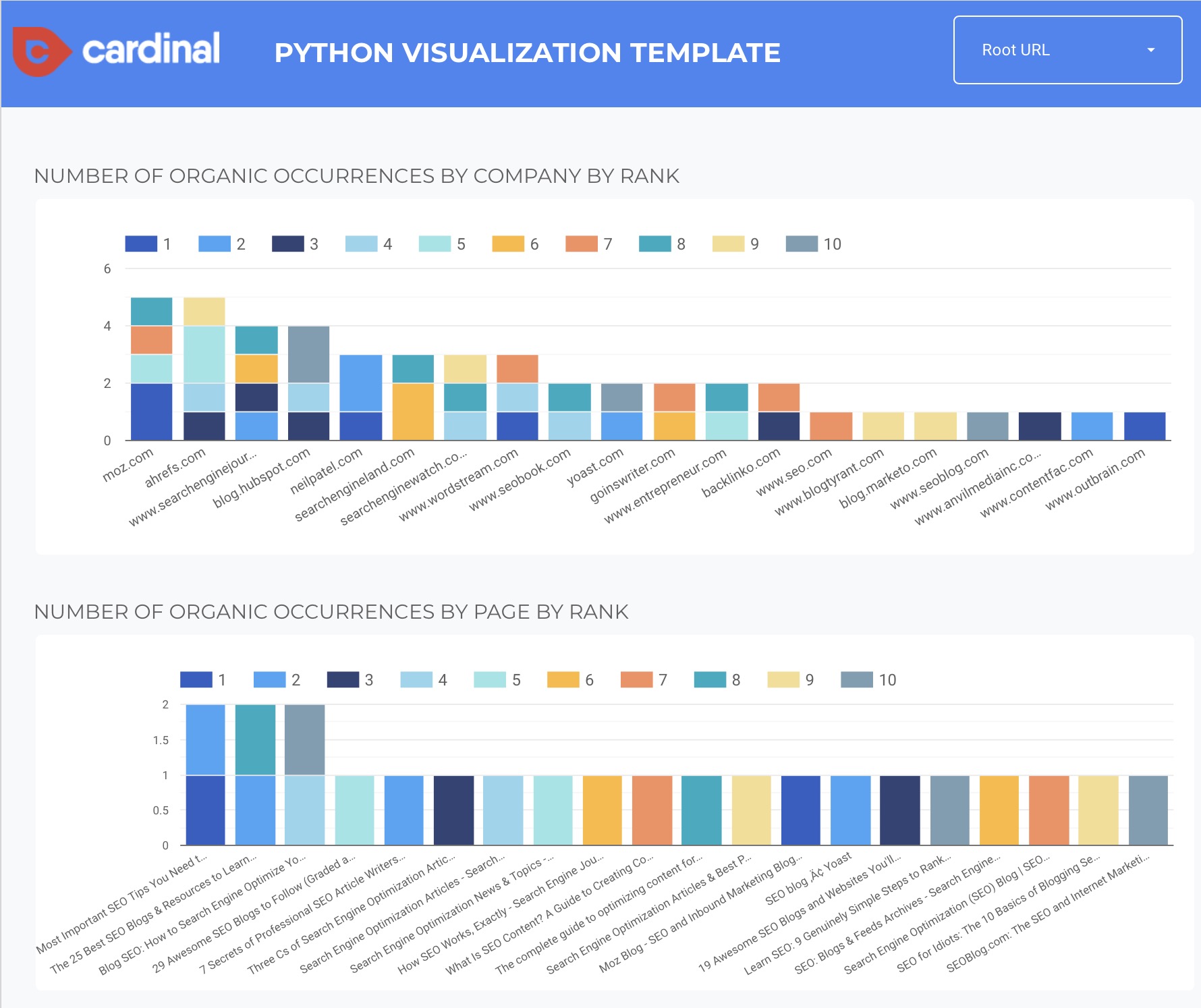

What we’re looking for are patterns. Yes, you can find patterns in the raw data, but this Data Studio template has a handy feature that allows us to quickly identify which pages are ranking the most frequently for our targeted keywords.

This is helpful, because it allows us to see which competitors are performing well, and which specific pages are performing well.

As you can see in the Data Studio screenshot above, Moz and Ahrefs are the top two competitors ranking for our searched keywords.

However, that doesn’t really help us figure out exactly what they’re doing to rank for those keywords.

That’s where the second chart comes in handy. It displays each ranking page and how many times it occurs across all of our search queries. We’re quickly able to identify the top three performing pages for our keywords.
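The same two counts (how often each domain appears and how often each page appears) can be roughly reproduced in Python if you want to check the charts against the raw data. This is a sketch under assumptions, not the template's actual logic: `count_pages_and_domains` is a made-up helper and the sample rows are invented for illustration.

```python
from collections import Counter
from urllib.parse import urlparse


def count_pages_and_domains(rows):
    """Count how often each URL and each domain appears in (keyword, url, title) rows."""
    page_counts = Counter(url for _keyword, url, _title in rows)
    domain_counts = Counter(urlparse(url).netloc for _keyword, url, _title in rows)
    return page_counts, domain_counts


# Made-up rows for illustration only; real rows come from data.csv.
sample_rows = [
    ("seo tips", "https://example.com/guide", "Guide"),
    ("learn seo", "https://example.com/guide", "Guide"),
    ("seo blog", "https://example.org/blog", "Blog"),
]
pages, domains = count_pages_and_domains(sample_rows)
print(pages.most_common(3))
print(domains.most_common(2))
```

A page that shows up for several different keywords is a good candidate for the deeper on-page and off-page review.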

Need to filter down to a page or keyword specific level?

We’ve included filters at the top of the Data Studio template to simplify this.

Once you’ve made a list of the best-performing pages, you can conduct further on-page and off-page analysis to figure out why those pages are performing so well.

Getting Stuck?

If you’re getting stuck, reach out to the inventor of this script for tips or custom programming solutions.

What’s Your Idea?

Hopefully, this has sparked some creative ideas on how you can use Python to help automate your SEO processes.

Image Credits

All screenshots taken by author, May 2019