Instagram launches tools to filter out abusive DMs based on keywords and emojis, and to block people, even on new accounts

Facebook and its family of apps have long grappled with how to better manage, and eradicate, bullying and other harassment on their platforms, turning to both algorithms and humans to tackle the problem. In the latest development, Instagram today is announcing some new tools of its own.

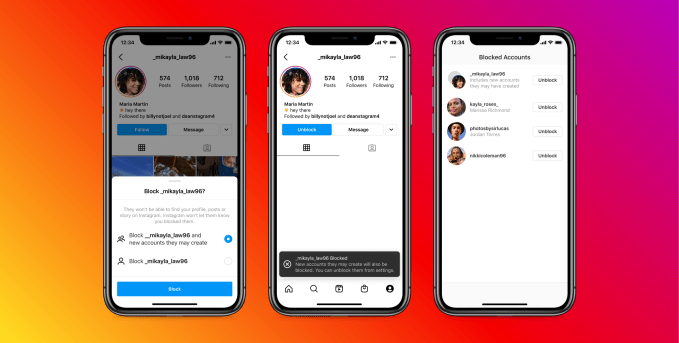

First, it’s introducing a new way for people to shield themselves from harassment in their direct messages, specifically in message requests: a filter built on a set of words, phrases and emojis that might signal abusive content. The list will also include common misspellings of those terms, which are sometimes used to try to evade filters. Second, it’s giving users the ability to proactively block people even if they try to contact the user in question over a new account.

The account-blocking feature is going live globally in the next few weeks, Instagram said, and it confirmed to me that the feature to filter out abusive DMs will start rolling out in the UK, France, Germany, Ireland, Canada, Australia and New Zealand in a few weeks’ time before becoming available in more countries over the next few months.

Notably, these features are only being rolled out on Instagram, not on Messenger or WhatsApp, Facebook’s other two hugely popular apps that enable direct messaging. A spokesperson confirmed that Facebook hopes to bring them to other apps in the stable later this year. (Instagram and others have regularly issued updates on single apps before considering how to roll them out more widely.)

Instagram said that the feature to filter DMs for abusive content (not scan them, the company was clear to point out) will be based on a list of words and emojis that Facebook compiles with the help of anti-discrimination and anti-bullying organizations (it did not specify which), along with terms and emojis that you might add yourself. And to be clear, it has to be turned on proactively, rather than being enabled by default.

Why? To give users more control, it seems, and to keep conversations private if users want them to be. “We want to respect peoples’ privacy and give people control over their experiences in a way that works best for them,” a spokesperson said, pointing out that this is similar to how its comment filters also work. It will live in Settings>Privacy>Hidden Words for those who want to turn on the control.
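To make the mechanics concrete, here is a minimal Python sketch of how an opt-in filter of this general kind could work. It is an illustration only, not Instagram’s implementation, which the company has not detailed: the class name `HiddenWordsFilter`, the `LOOKALIKES` substitution table, and the sample terms are all hypothetical. It assumes a default term list plus user-added terms, a simple normalization step to catch some common misspellings, and a switch that is off by default.

```python
import re
import unicodedata

# Hypothetical table of character swaps sometimes used to dodge filters.
LOOKALIKES = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Lowercase, strip accents, and undo simple lookalike substitutions."""
    text = unicodedata.normalize("NFKD", text.lower())
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    return text.translate(LOOKALIKES)


class HiddenWordsFilter:
    """Illustrative opt-in filter over words, phrases and emojis."""

    def __init__(self, default_terms, enabled=False):
        # Off by default: the user has to turn the filter on.
        self.enabled = enabled
        self.terms = {normalize(t) for t in default_terms}

    def add_term(self, term: str) -> None:
        """Add a user-supplied word, phrase or emoji to the list."""
        self.terms.add(normalize(term))

    def should_hide(self, message: str) -> bool:
        """Return True if the message matches any term on the list."""
        if not self.enabled:
            return False
        normalized = normalize(message)
        for term in self.terms:
            if re.search(r"\w", term):
                # Words and phrases must match on word boundaries.
                if re.search(r"\b" + re.escape(term) + r"\b", normalized):
                    return True
            elif term in normalized:
                # Emojis and other symbols match anywhere in the text.
                return True
        return False
```

With placeholder terms such as `"loser"` and `"🤡"`, a message like `"you L0SER"` would be hidden once the filter is enabled, while `"closers win"` would not, because words are matched on boundaries. A production system would need a far richer approach to misspellings than this character table.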

There are a number of third-party services out there in the wild now building content moderation tools that sniff out harassment and hate speech — they include the likes of Sentropy and Hive — but what has been interesting is that the larger technology companies up to now have opted to build these tools themselves. That is also the case here, the company confirmed.

The system is completely automated, although Facebook noted that it reviews any content that gets reported. While it doesn’t keep data from those interactions, it confirmed that it will be using reported words to continue building its bigger database of terms that will trigger content getting blocked, and the subsequent deleting, blocking and reporting of the people who are sending it.

On the subject of those people, it’s been a long time coming for Facebook to get smarter about how it handles the fact that people with really ill intent have wasted no time in building multiple accounts to pick up the slack when their primary profiles get blocked. People have been aggravated by this loophole for as long as DMs have been around, even though Facebook’s harassment policies already prohibited people from repeatedly contacting someone who doesn’t want to hear from them. The company had also already prohibited recidivism, which, as Facebook describes it, means “if someone’s account is disabled for breaking our rules, we would remove any new accounts they create whenever we become aware of it.”

The company’s approach to Direct Messages has been something of a template for how other social media companies have built these out.

In essence, they are open-ended by default, with one inbox reserved for actual contacts and a second one for anyone at all to contact you. While some people just ignore that second box altogether, Instagram is built to encourage more, not less, contact with others, and that means people will use those second inboxes for their DMs more than they might, for example, delve into their spam inboxes in email.

The bigger issue continues to be a game of whack-a-mole, however, and it is not just users who are asking for more help to solve it. As Facebook continues to find itself under the scrutinizing eye of regulators, harassment, and better management of it, has emerged as a key area that it will be required to solve before others do the solving for it.
